A VMware vulnerability with a severity rating of 9.8 out of 10 is under active exploitation. At least one reliable exploit has gone public, and there have been successful attempts in the wild to compromise servers that run the vulnerable software.

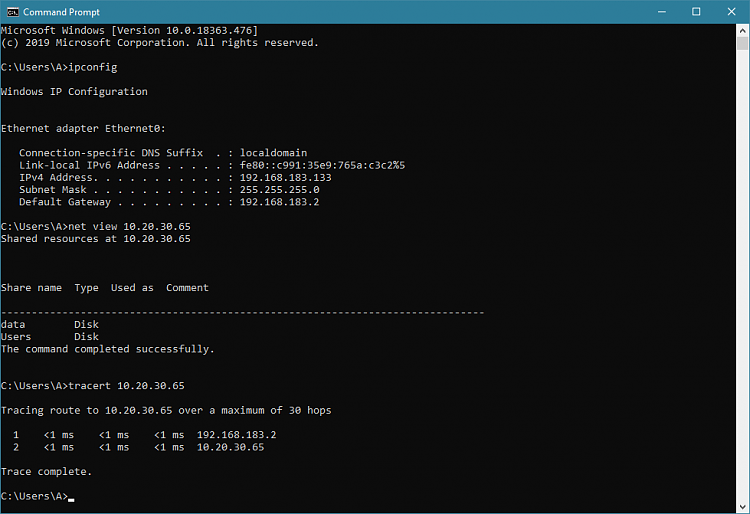

The vulnerability, tracked as CVE-2021-21985, resides in the vCenter Server, a tool for managing virtualization in large data centers. A VMware advisory published last week said vCenter machines using default configurations have a bug that, in many networks, allows for the execution of malicious code when the machines are reachable on a port that is exposed to the Internet.

Code execution, no authentication required

On Wednesday, a researcher published proof-of-concept code that exploits the flaw. A fellow researcher who asked not to be named said the exploit works reliably and that little additional work is needed to use the code for malicious purposes. It can be reproduced using five requests from cURL, a command-line tool that transfers data using HTTP, HTTPS, IMAP, and other common Internet protocols.

Another researcher who tweeted about the published exploit told me he was able to modify it to gain remote code execution with a single mouse click.

Quick confirm that this is the real PoC of CVE-2021-21985 👍 pic.twitter.com/jsXKFf1lZZ

— Janggggg (@testanull) June 3, 2021

“It will get code execution in the target machine without any authentication mechanism,” the researcher said.

I haz web shell

Researcher Kevin Beaumont, meanwhile, said on Friday that one of his honeypots—meaning an Internet-connected server running out-of-date software so the researcher can monitor active scanning and exploitation—began seeing scanning by remote systems searching for vulnerable servers.

About 35 minutes later, he tweeted, “Oh, one of my honeypots got popped with CVE-2021-21985 while I was working, I haz web shell (surprised it’s not a coin miner).”

A web shell is a command-line tool that hackers use after successfully gaining code execution on vulnerable machines. Once installed, attackers anywhere in the world have essentially the same control that legitimate administrators have.

Troy Mursch of Bad Packets reported on Thursday that his honeypot had also started receiving scans. On Friday, the scans were continuing, he said. A few hours after this post went live, the Cybersecurity and Infrastructure Security Agency (CISA) released an advisory.

It said: 'CISA is aware of the likelihood that cyber threat actors are attempting to exploit CVE-2021-21985, a remote code execution vulnerability in VMware vCenter Server and VMware Cloud Foundation. Although patches were made available on May 25, 2021, unpatched systems remain an attractive target and attackers can exploit this vulnerability to take control of an unpatched system.'

Under barrage

The in-the-wild activity is the latest headache for administrators who were already under barrage by malicious exploits of other serious vulnerabilities. Since the beginning of the year, various apps used in large organizations have come under attack. In many cases, the vulnerabilities have been zero-days, exploits that were being used before companies issued a patch.

Attacks included Pulse Secure VPN exploits targeting federal agencies and defense contractors, successful exploits of a code-execution flaw in the BIG-IP line of server appliances sold by Seattle-based F5 Networks, the compromise of Sonicwall firewalls, the use of zero-days in Microsoft Exchange to compromise tens of thousands of organizations in the US, and the exploitation of organizations running versions of the Fortinet VPN that hadn’t been updated.

Like all of the exploited products above, vCenter resides in potentially vulnerable parts of large organizations’ networks. Once attackers gain control of the machines, it’s often only a matter of time until they can move to parts of the network that allow for the installation of espionage malware or ransomware.

Admins responsible for vCenter machines that have yet to patch CVE-2021-21985 should install the update immediately if possible. It wouldn’t be surprising to see attack volumes crescendo by Monday.

Post updated to add CISA advisory.

VMware Telco Cloud Automation 1.9.5 | 05 AUG 2021 | ISO Build 18379906 | R147

Check for additions and updates to these release notes.

What's New

Airgap Support: True support for a complete air-gapped environment.

You can now create an air-gapped server that serves as a repository for all binaries and libraries that are required by VMware Telco Cloud Automation and VMware Tanzu Kubernetes Grid for performing end-to-end operations.

In an air-gapped environment, you can now:

- Deploy VMware Tanzu Kubernetes Grid clusters.

- Perform late-binding operations.

- Perform license upgrades.

The airgap setup requires packages to be placed in a private air-gapped repository. To keep the packages up to date and to manage the repository, it must have access to the Internet.

CSI Zoning Support

VMware vSphere-CSI is now multi-zone and region aware (based on VMware vCenter tags).

In a default vanilla Tanzu Kubernetes Grid (TKG) cluster, you cannot use vSphere CSI if there is no shared storage across the whole cluster, which limits Stretched cluster deployments. To address this limitation, VMware Telco Cloud Automation 1.9.5 supports a zoning feature. When zoning is enabled, an operator can dynamically provision Persistent Volumes through vSphere CSI, even in a cluster with no shared storage available.

Intel Mount Bryce Acceleration Card Support

VMware Telco Cloud Automation 1.9.5 now supports Intel’s Mount Bryce driver. Mount Bryce exposes accelerator functionality as a virtual function (VF), so that multiple DUs can use these VFs for Forward Error Correction (FEC) offload. FEC in the physical layer provides hardware acceleration by liberating compute resources for RAN (both CU & DU) workloads. The FEC acceleration device utilizes the SR-IOV feature of PCIe to support up to 16 VFs.

VMware Telco Cloud Automation 1.9.5 automates the configuration of the VF so RAN ISVs can consume it.

Cache Allocation Technology (CAT) Support

VMware Telco Cloud Automation 1.9.5 addresses certain DU requirements through its CAT support. CAT support allocates resource capacity in the Last Level Cache (LLC) based on the class of service. This capability prevents a single DU from consuming all available resources. As such, with CAT the LLC can be divided between multiple DUs. VMware Telco Cloud Automation 1.9.5 automates the configuration at the ESXi layer to provide CAT support to RAN ISVs, to enable them to deploy multiple DUs.
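The partitioning idea behind CAT can be sketched as follows. This is only an illustration of dividing cache ways between DUs with contiguous, non-overlapping capacity bitmasks; it is not how VMware Telco Cloud Automation configures ESXi. The function name, the 12-way cache, and the per-DU way counts are hypothetical.

```python
def partition_llc_ways(total_ways, ways_per_du):
    """Give each DU a contiguous, non-overlapping bitmask of cache ways.

    total_ways and ways_per_du are illustrative inputs, not values
    taken from the release notes.
    """
    if any(ways < 1 for ways in ways_per_du.values()):
        raise ValueError("each DU needs at least one cache way")
    if sum(ways_per_du.values()) > total_ways:
        raise ValueError("requested ways exceed the total ways in the cache")

    masks = {}
    next_way = 0  # lowest cache way that is still unassigned
    for du, ways in ways_per_du.items():
        # A run of `ways` set bits, shifted past the ways already handed out.
        masks[du] = ((1 << ways) - 1) << next_way
        next_way += ways
    return masks


# Hypothetical example: a 12-way LLC shared by three DUs.
masks = partition_llc_ways(12, {"du-a": 4, "du-b": 4, "du-c": 2})
for du, mask in masks.items():
    print(f"{du}: {mask:#05x}")
```

Because the masks do not overlap, no DU in this sketch can evict cache lines that belong to another DU, which is the property the paragraph above describes.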

VMware Telco Cloud Automation 1.9.5 includes support for:

- VMware Tanzu Kubernetes Grid version 1.3.1

- VMware NSX-T Data Center 3.1.2

Important Notes

vSphere CSI Multi-Zone Support Notes

- Multi-zone feature works on the workload cluster created from the newly created Management cluster.

- Multi-zone DAY-1 configuration is not supported on an existing Kubernetes cluster that is upgraded from previous VMware Telco Cloud Automation versions. It is also not supported on a newly created workload cluster from a Management cluster that is upgraded from a previous VMware Telco Cloud Automation version.

- When you upgrade from a previous version with multi-zone enabled manually, the configuration is preserved. However, the VMware Telco Cloud Automation UI or API does not support disabling and enabling the multi-zone feature.

Airgap Notes

- The air-gap solution in 1.9.5 works only in an environment with restricted Internet access and a DNS service, so that the clusters can resolve the address of the air-gap server.

- Configure the workload cluster with air-gap settings that are similar to its related Management cluster. VMware Telco Cloud Automation does not validate these settings. However, workload cluster deployment fails if the air-gap settings on the Workload cluster and the Management cluster are different.

- The air-gap server works only on a newly deployed Kubernetes cluster, or on a Management cluster that is deployed on a newly deployed Kubernetes cluster. The air-gap server does not work on existing Kubernetes clusters that are upgraded from previous VMware Telco Cloud Automation versions. It also does not work on workload clusters deployed on existing Management clusters that are upgraded from previous VMware Telco Cloud Automation versions.

- To deploy a Management cluster, the VMware Telco Cloud Automation Control Plane must be able to access the air-gap server that is set to the Management cluster.
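Because VMware Telco Cloud Automation does not validate that the workload cluster's air-gap settings match those of its Management cluster, a pre-deployment comparison can catch mismatches early. The following is a minimal sketch of such a check. The setting keys (`fqdn`, `ca_cert_sha256`) and values are hypothetical and do not reflect the product's actual configuration schema.

```python
def airgap_mismatches(management, workload):
    """Return {key: (management_value, workload_value)} for settings that differ.

    Both arguments are plain dicts of air-gap settings; the keys used in
    the example below are invented for illustration.
    """
    keys = set(management) | set(workload)
    return {
        key: (management.get(key), workload.get(key))
        for key in sorted(keys)
        if management.get(key) != workload.get(key)
    }


# Hypothetical settings for a Management cluster and one of its workload clusters.
mgmt = {"fqdn": "airgap.example.internal", "ca_cert_sha256": "ab12"}
wkld = {"fqdn": "airgap.example.internal", "ca_cert_sha256": "cd34"}

print(airgap_mismatches(mgmt, wkld))
```

An empty result means the two sets of settings agree. Any entry in the result names a setting to correct before attempting the workload cluster deployment.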

Helm addon version Notes

- VMware Telco Cloud Automation CaaS supports only Helm v2.17 and v3.5.

- The VMware Telco Cloud Automation API for installing any Helm 2.x version is now obsolete. However, the VMware Telco Cloud Automation UI does not contain any changes.

Kubernetes Version 1.17 Discontinuation

- VMware Telco Cloud Automation, along with VMware Tanzu Kubernetes Grid, has discontinued support for Kubernetes Management and Workload Clusters with version 1.17.

- It is mandatory to upgrade any 1.17 Kubernetes clusters to a newer version after upgrading to VMware Telco Cloud Automation 1.9.5.
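To plan the mandatory upgrades, it may help to list the clusters still running Kubernetes 1.17. The following sketch does this for an inventory held in a plain dictionary. The cluster names, the version strings, and the inventory format are hypothetical, and the sketch does not call any VMware Telco Cloud Automation API.

```python
def major_minor(version):
    """Parse 'v1.17.16' or '1.17.16' into the tuple (1, 17)."""
    parts = version.lstrip("v").split(".")
    return int(parts[0]), int(parts[1])


def clusters_on_discontinued_version(clusters, discontinued=(1, 17)):
    """Return the names of clusters whose major.minor version is discontinued."""
    return [
        name
        for name, version in clusters.items()
        if major_minor(version) == discontinued
    ]


# Hypothetical inventory: cluster name -> Kubernetes version.
inventory = {"mgmt-01": "v1.20.5", "wkld-01": "1.17.16", "wkld-02": "v1.19.1"}

print(clusters_on_discontinued_version(inventory))
```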

Download Photon BYOI Templates for VMware Tanzu Kubernetes Grid

To download Photon BYOI templates, perform the following steps:

- Go to the VMware Customer Connect site at www.my.vmware.com.

- From the top menu, select Products and Accounts > All Products.

- In the All Downloads page, scroll down to VMware Telco Cloud Automation and click View Download Components.

- In the Download VMware Telco Cloud Automation page, ensure that the version selected is 1.9.

- Against VMware Telco Cloud Automation 1.9.5, click Go To Downloads.

- Click the Drivers & Tools tab.

- Expand the category VMware Telco Cloud Automation 1.9.5 Photon BYOI Templates for TKG.

- Against Photon BYOI Templates for VMware Tanzu Kubernetes Grid 1.3.1, click Go To Downloads.

- In the Download Product page, download the appropriate Photon BYOI template.

Resolved Issues

CNF Lifecycle Management

Workflow outputs are not displayed for CNF upgrades.

If a CNF contains pre-upgrade or post-upgrade workflows, the output of these workflows is not displayed within the CNF upgrade task.

A multi-chart CNF instantiation might fail for the second chart when the same namespace is used for all the helm charts.

This issue is noticed when instantiating a CNF that has multiple charts.

VIM

Re-registration of vCenter VIM ends up with the VIM status as 'Unavailable'.

When you deregister and reregister a vCenter VIM that has some CaaS clusters deployed on it previously, the VIM changes to the 'Unavailable' state permanently.

Known Issues

Infrastructure Automation

The CSI tagging is applicable only for newly added hosts in a Cell Site Group.

The VMware vSphere server Container Storage Interface (CSI) tagging is applicable only on the newly added hosts for a Cell Site Group (CSG).

This restriction is not applicable to the hosts already added to a Cell Site Group.

CSI tagging is not enabled by default for an air-gapped environment with the standalone mode of activation

The CSI tagging is not enabled by default for an Air-gapped environment that has a standalone mode of license activation.

Contact VMware support team for enabling the CSI tagging feature.

No option to edit or remove CSI tagging after it is enabled.

After you set the CSI tags for a domain, you cannot modify or remove the tags.

In a brownfield deployment, the user must edit and save the domain information to enable the CSI tagging feature upon resync.

To use the CSI feature in a brownfield deployment, edit and save the domain information, and then perform a resync operation for that domain.

CSI tagging is not supported for predeployed domains.

CSI tagging feature is not applicable for predeployed domains.

However, VMware Telco Cloud Automation does not modify the existing tags if already set in the underlying VMware vSphere server for the predeployed domains.

Failure to provision domain when 4 PNICs are configured.

Telco Cloud Automation fails to provision domains when 4 PNICs are configured on a DVS and edge cluster deployment is enabled.

No option to delete a compute cluster that is 'ENABLED' but in the 'failed' state.

If the compute cluster is 'ENABLED' but in the 'failed' state and the user attempts to delete it, the cluster is removed from the Telco Cloud Automation inventory, but the cluster resources are left intact.

Manually delete the cluster resources by logging in to the VMware vCenter Server and the VMware NSX-T server.

A config spec JSON from one Telco Cloud Automation setup does not work in other Telco Cloud Automation setups.

As a security requirement, the downloaded config spec JSON file does not include appliance passwords. As a result, a config spec JSON downloaded from one Telco Cloud Automation setup cannot be uploaded to another Telco Cloud Automation setup.

Need to regenerate the self-signed certificate on all the hosts.

Cloud Builder 4.2 has a behavior change that requires the user to regenerate the self-signed certificate on all the hosts. For details, see Cloud Builder.

The vSAN NFS image URL must be added to the images list.

Before 1.9.5, Telco Cloud Automation obtained the vSAN NFS OVF from https://download3.vmware.com. With the introduction of the airgap server, Telco Cloud Automation can no longer obtain the vSAN NFS OVF from https://download3.vmware.com.

- Provide the URL of the vSAN NFS OVF file under the Images section of the Configuration tab in Infrastructure Automation. Provide only the OVF URL; otherwise, the installation may fail.

- Check the Manual Approach and upload the required images to the image server. For details on required files, see vSAN Manual Approach.

Cluster Automation

CaaS Cluster creation fails when the cluster is connected to an NSX segment that spans multiple DVS

Cluster creation fails when it is connected to an NSX segment that spans across multiple DVS and across the same Transport Zone.

- Create a Network Folder for each DVS which belongs to the same Transport Zone in vCenter.

- Move the DVS to the newly created Network Folder.

Failed to upgrade Tanzu Kubernetes Grid (TKG) cluster

Upgrading a TKG cluster from Kubernetes version 1.19.1 to Kubernetes version 1.20.5 from Telco Cloud Automation 1.9.5 fails on some older machines, and CAPI/CAPV cannot delete the VMware vSphere VM.

- SSH into tca-cp VM

- Switch to management cluster context

- Use the command 'kubectl get machine -A' to list the machine to delete.

- Use the command 'kubectl delete vspherevm k8-mgmt-cluster-np1-769b4484c5-pq4p5 -n tkg-system --force --grace-period 0' to manually delete the vSphere VM

- Retry from Telco Cloud Automation UI
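The VM name in the delete command above is specific to one environment, so the command has to be rebuilt for each stuck machine. The following sketch assembles the same two kubectl commands for a given VM name and prints them without running anything. The helper name is hypothetical, and the default namespace is taken from the example command above.

```python
import shlex

# Lists machines in all namespaces, as in the workaround steps.
LIST_MACHINES_CMD = ["kubectl", "get", "machine", "-A"]


def force_delete_vspherevm_cmd(vm_name, namespace="tkg-system"):
    """Build the argument list that force-deletes one vSphere VM object."""
    return [
        "kubectl", "delete", "vspherevm", vm_name,
        "-n", namespace,
        "--force", "--grace-period", "0",
    ]


# The VM name is the one from the release-note example; substitute the name
# reported by the list command in your own environment.
delete_cmd = force_delete_vspherevm_cmd("k8-mgmt-cluster-np1-769b4484c5-pq4p5")

print(shlex.join(LIST_MACHINES_CMD))
print(shlex.join(delete_cmd))
```

Printing the commands rather than executing them keeps a review step before a forced deletion, which is assumed here to be the safer default.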

Configuring the add-on fails intermittently while deploying the cluster.

While deploying the cluster, add-on configuration intermittently fails with the error: 'Error: failed to generate and apply NodeConfig CR'.

If the add-on configuration partially fails, edit the cluster and re-add Harbor on the cluster.

After a scale-in operation on the Kubernetes cluster, stale worker nodes are still shown in the UI.

After performing a scale-in operation on the Kubernetes cluster, some stale worker nodes are visible within the VMware Telco Cloud Automation CaaS Infrastructure UI.

This is a temporary sync delay. VMware Telco Cloud Automation displays the correct data automatically after 2 hours.

Node Customization

Need to enable PCI passthrough on PF0 when the E810 card is configured with multiple PF groups.

To use the PTP PHC services, enable PCI passthrough on PF0 when the E810 card is configured with multiple PF groups.

To use the Mt. Bryce card, install the ibbd-pf driver VIB and the bbdev configuration tool on the VMware ESXi server. These enable support for the ACC100 adapter in the VMware ESXi server.

Catalog Management

'Save as new Catalog' does not work properly.

'Save as new Catalog' replaces every string and substring that matches the old nodeTemplate name with the new catalog name.

CNF Lifecycle Management

CNF instantiation fails with errors during grant validations for certain Helm charts.

Grant requests fail with the error: 'Helm API failed'. This is seen in Helm charts containing a dotted parameterization notation in the YAML files. More specifically, this issue is observed in instances where there is a space between ‘{{’ or ‘}}’ and the text between them. For example: '{{ .Values.forconfig.alertmanager_HOST }}'

Edit the Helm chart to remove the offending space and then perform CNF instantiation. For example: '{{.Values.forconfig.alertmanager_HOST}}'
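For charts with many such expressions, the manual edit described above could be scripted. The following is a minimal sketch that removes the padding just inside '{{' and '}}' with a regular expression. The function name is hypothetical, the pattern handles only single-line expressions, and the result should be reviewed before the chart is repackaged.

```python
import re

# Matches one single-line template action and captures its inner text
# without the surrounding whitespace.
_PADDED_ACTION = re.compile(r"\{\{\s*(.*?)\s*\}\}")


def strip_template_padding(text):
    """Remove whitespace directly inside '{{' and '}}' delimiters."""
    return _PADDED_ACTION.sub(lambda m: "{{" + m.group(1) + "}}", text)


# The expression from the release-note example, inside a made-up YAML key.
line = "host: {{ .Values.forconfig.alertmanager_HOST }}"
print(strip_template_padding(line))
```

Text outside the delimiters and spaces inside the expression itself are left unchanged, so expressions that already have no padding pass through as-is.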

User Interface

Product Documentation and Support Center links will not work if the client browser does not have access to Internet.

In a complete air-gapped environment that does not have access to the Internet, the Product Documentation and Support Center links will not work.

Ensure that the browser or the client machine has Internet access.