The syslog logging driver routes logs to a syslog server, such as NXLog, via UDP, TCP, SSL/TLS, or a Unix domain socket. See the Syslog logging driver guide on Docker.com for more information.

The Docker logging drivers capture all the output from a container's stdout/stderr and can send a container's logs directly to most major logging solutions (syslog, Logstash, GELF, Fluentd). As an added benefit, because the logging implementation is a runtime choice for each container, you have the flexibility to use a simpler implementation.

Docker Syslog driver can block container deployment and lose logs when Syslog server is not reachable

Using the Docker syslog driver with TCP or TLS is a reliable way to deliver logs. However, with these transports the syslog logging driver requires an established TCP connection to the syslog server when a container starts up. If that connection can't be made, the container fails to start.
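A TCP or TLS syslog-address has to accept a connection before the container can start. Because of that, a quick preflight check can catch an unreachable server early.

The Python sketch below is a hypothetical helper and is not part of Docker. The function name syslog_reachable and the 3-second timeout are illustrative assumptions. The check only shows that something accepts TCP connections on the port. It does not show that TLS negotiation or message delivery will succeed.

```python
import socket


def syslog_reachable(host: str, port: int = 514, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port opens within `timeout` seconds.

    Hypothetical preflight helper, not part of Docker. It only proves that
    something accepts TCP connections on that port; it does not check TLS
    or that the listener is actually a syslog server.
    """
    try:
        # create_connection resolves the host and tries each address in turn.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused connections, timeouts, and DNS failures all land here.
        return False
```

For example, you could run syslog_reachable("192.0.2.10") against your own server's address (192.0.2.10 is a placeholder) before starting containers that log over TCP.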


The syslog logging driver routes logs to a syslog server. The syslog protocol uses a raw string as the log message and supports a limited set of metadata. The syslog message must be formatted in a specific way to be valid. From a valid message, the receiver can extract the following information:

- priority: the logging level, such as debug, warning, error, info.
- timestamp: when the event occurred.
- hostname: where the event happened.
- facility: which subsystem logged the message, such as mail or kernel.
- process name and process ID (PID): the name and ID of the process that generated the log.

The format is defined in RFC 5424, and Docker's syslog driver implements the ABNF reference from that RFC.
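To make the format concrete, here is a minimal Python sketch that assembles an RFC 5424-style line by hand. It is not Docker's implementation. The following are assumptions for illustration:

- the helper names;
- the small facility and severity tables (a subset of RFC 5424's codes);
- always emitting UTC with a "Z" offset.

The priority value is computed as facility * 8 + severity, as RFC 5424 specifies. "-" is the RFC's NILVALUE, used here for the empty MSGID and STRUCTURED-DATA fields.

```python
from datetime import datetime, timezone

# Subset of the numeric codes defined in RFC 5424, section 6.2.1.
FACILITIES = {"kern": 0, "user": 1, "mail": 2, "daemon": 3}
SEVERITIES = {"err": 3, "warning": 4, "info": 6, "debug": 7}


def rfc5424_timestamp(ts: datetime, micro: bool = False) -> str:
    """Format an aware datetime as an RFC 5424 TIMESTAMP, normalized to UTC."""
    ts = ts.astimezone(timezone.utc)
    stamp = ts.strftime("%Y-%m-%dT%H:%M:%S")
    if micro:
        # TIME-SECFRAC allows up to six fractional digits.
        stamp += f".{ts.microsecond:06d}"
    return stamp + "Z"


def rfc5424_message(facility, severity, ts, hostname, app_name, procid, msg, micro=False):
    """Build '<PRI>1 TIMESTAMP HOSTNAME APP-NAME PROCID MSGID SD MSG' (hypothetical helper)."""
    pri = FACILITIES[facility] * 8 + SEVERITIES[severity]
    # VERSION is 1; MSGID and STRUCTURED-DATA are left as the NILVALUE "-".
    return f"<{pri}>1 {rfc5424_timestamp(ts, micro)} {hostname} {app_name} {procid} - - {msg}"
```

With micro=True, the timestamp carries six fractional digits. That is the extra resolution the rfc5424micro value of syslog-format refers to.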

Usage

To use the syslog driver as the default logging driver, set the log-driver and log-opts keys to appropriate values in the daemon.json file, which is located in /etc/docker/ on Linux hosts or C:\ProgramData\docker\config\daemon.json on Windows Server. For more about configuring Docker using daemon.json, see daemon.json.

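For example, a configuration that sets the log driver to syslog and sets the syslog-address option to a udp:// address sends every container's logs to that syslog server over UDP. The syslog-address option supports both UDP and TCP, as well as the other transports listed under Options.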

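Restart Docker for the changes to take effect. The new default applies only to containers created after the restart. Existing containers keep the logging configuration they were created with.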

Note

log-opts configuration options in the daemon.json configuration file must be provided as strings. Numeric and boolean values (such as the value for syslog-tls-skip-verify) must therefore be enclosed in double quotes (").
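If you generate daemon.json from a script, the string-only rule for log-opts is easy to enforce in code. The Python sketch below shows one way to do it, with these assumptions:

- build_daemon_config is a hypothetical helper.
- 192.0.2.10 is a placeholder documentation address, not a real server.

```python
import json


def build_daemon_config(log_opts: dict) -> str:
    """Return daemon.json text that makes syslog the default logging driver.

    Hypothetical helper: every log-opts value is converted to a string,
    because daemon.json expects strings for log-opts values.
    """
    opts = {}
    for key, value in log_opts.items():
        if isinstance(value, bool):
            # Checked first: str(True) would give "True", not "true".
            opts[key] = "true" if value else "false"
        else:
            opts[key] = str(value)
    return json.dumps({"log-driver": "syslog", "log-opts": opts}, indent=2)


if __name__ == "__main__":
    # Placeholder address from the 192.0.2.0/24 documentation range.
    print(build_daemon_config({
        "syslog-address": "tcp+tls://192.0.2.10:514",
        "syslog-tls-skip-verify": False,
    }))
```

To use the output:

1. Write the printed JSON to /etc/docker/daemon.json (as root).
2. Restart Docker.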

You can set the logging driver for a specific container by using the --log-driver flag with docker container create or docker run.
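When you start containers from a script, you can assemble the per-container flags as an argument list. In this sketch, docker_run_args is a hypothetical helper. The flags it emits are the ones Docker documents here: --log-driver, plus one --log-opt key=value flag per option. The image name, address, and tag in the usage lines are placeholders.

```python
import shlex


def docker_run_args(image, log_opts=None, command=None):
    """Build a `docker run` argument list that uses the syslog logging driver.

    Hypothetical helper: each entry in log_opts becomes one
    --log-opt key=value flag, placed before the image name.
    """
    args = ["docker", "run", "--log-driver", "syslog"]
    for key, value in (log_opts or {}).items():
        args += ["--log-opt", f"{key}={value}"]
    args.append(image)
    # Anything after the image is the command run inside the container.
    args += list(command or [])
    return args


if __name__ == "__main__":
    # Placeholder image, address, and tag; print the command instead of running it.
    print(shlex.join(docker_run_args(
        "alpine",
        {"syslog-address": "udp://192.0.2.10:514", "tag": "mailer"},
        ["echo", "hello"],
    )))
```

To actually start the container, pass the list to subprocess.run(..., check=True). An argument list avoids shell-quoting problems with option values.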

Options

The following logging options are supported as options for the syslog logging driver. They can be set as defaults in the daemon.json by adding them as key-value pairs to the log-opts JSON object. They can also be set on a given container by adding a `--log-opt <key>=<value>` flag for each option when starting the container.

| Option | Description | Example value |
|---|---|---|
| syslog-address | The address of an external syslog server. The URI specifier may be [tcp\|udp\|tcp+tls]://host:port, unix://path, or unixgram://path. If the transport is tcp, udp, or tcp+tls, the default port is 514. | --log-opt syslog-address=tcp+tls://192.168.1.3:514, --log-opt syslog-address=unix:///tmp/syslog.sock |
| syslog-facility | The syslog facility to use. Can be the number or name for any valid syslog facility. See the syslog documentation. | --log-opt syslog-facility=daemon |
| syslog-tls-ca-cert | The absolute path to the trust certificates signed by the CA. Ignored if the address protocol is not tcp+tls. | --log-opt syslog-tls-ca-cert=/etc/ca-certificates/custom/ca.pem |
| syslog-tls-cert | The absolute path to the TLS certificate file. Ignored if the address protocol is not tcp+tls. | --log-opt syslog-tls-cert=/etc/ca-certificates/custom/cert.pem |
| syslog-tls-key | The absolute path to the TLS key file. Ignored if the address protocol is not tcp+tls. | --log-opt syslog-tls-key=/etc/ca-certificates/custom/key.pem |
| syslog-tls-skip-verify | If set to true, TLS verification is skipped when connecting to the syslog daemon. Defaults to false. Ignored if the address protocol is not tcp+tls. | --log-opt syslog-tls-skip-verify=true |
| tag | A string that is appended to the APP-NAME in the syslog message. By default, Docker uses the first 12 characters of the container ID to tag log messages. Refer to the log tag option documentation for customizing the log tag format. | --log-opt tag=mailer |
| syslog-format | The syslog message format to use. If not specified, the local UNIX syslog format is used, without a specified hostname. Specify rfc3164 for the RFC-3164 compatible format, rfc5424 for the RFC-5424 compatible format, or rfc5424micro for the RFC-5424 compatible format with microsecond timestamp resolution. | --log-opt syslog-format=rfc5424micro |
| labels | Applies when starting the Docker daemon. A comma-separated list of logging-related labels this daemon accepts. Used for advanced log tag options. | --log-opt labels=production_status,geo |
| labels-regex | Applies when starting the Docker daemon. Similar to and compatible with labels. A regular expression to match logging-related labels. Used for advanced log tag options. | --log-opt labels-regex=^(production_status\|geo) |
| env | Applies when starting the Docker daemon. A comma-separated list of logging-related environment variables this daemon accepts. Used for advanced log tag options. | --log-opt env=os,customer |
| env-regex | Applies when starting the Docker daemon. Similar to and compatible with env. A regular expression to match logging-related environment variables. Used for advanced log tag options. | --log-opt env-regex=^(os\|customer) |
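You can check the address rules in the syslog-address row before handing a value to Docker. This Python sketch follows only what the table states: the five URI schemes and the default port 514. Two things are assumptions:

- It is only assumed that this mirrors Docker's own parsing closely enough for your use.
- parse_syslog_address is a hypothetical name.

```python
from urllib.parse import urlparse

DEFAULT_PORT = 514  # default for tcp, udp, and tcp+tls, per the options table
NETWORK_SCHEMES = {"tcp", "udp", "tcp+tls"}
SOCKET_SCHEMES = {"unix", "unixgram"}


def parse_syslog_address(address: str):
    """Split a syslog-address value into (scheme, target).

    Hypothetical helper. For tcp, udp, and tcp+tls the target is a
    (host, port) tuple, with port 514 filled in when omitted; for unix
    and unixgram it is the socket path.
    """
    parsed = urlparse(address)
    scheme = parsed.scheme
    if scheme in NETWORK_SCHEMES:
        if not parsed.hostname:
            raise ValueError(f"missing host in {address!r}")
        # parsed.port is None when no port is given; it raises ValueError if out of range.
        return scheme, (parsed.hostname, parsed.port or DEFAULT_PORT)
    if scheme in SOCKET_SCHEMES:
        return scheme, parsed.path
    raise ValueError(f"unsupported syslog-address scheme: {scheme!r}")
```

For example, parse_syslog_address("tcp+tls://192.168.1.3:514") yields ("tcp+tls", ("192.168.1.3", 514)), and unix:///tmp/syslog.sock yields the path /tmp/syslog.sock.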

When you’re troubleshooting an application, the first instinct is to read the logs to understand what’s happening. When working with containers, though, we need to consider the ephemeral nature of a container. By the time you find out there’s a problem with your application, the container and the logs might be gone. Fortunately, Docker has a native way of reading logs from a container.

However, we rarely run just one container, and our systems have multiple dependencies. We need a centralized location for our logs to get better context on a problem. That way, even if a container has gone away, we can still read the logs collected while it was running.

In this post, I’ll provide a quick overview of why having a syslog server might be a good option. Then, I’ll show you how you can integrate syslog and Docker to improve your troubleshooting strategy through logs.

Reading Logs From a Container

Docker comes with a native command, docker logs, to read logs from a container. To see it in action, start a container that simply prints text indefinitely.

To read the logs from that container, you need either its name or its ID. Then you can read the logs with docker logs CONTAINER, adding the -f flag to follow them live.
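If you want to consume these logs from a script, you can wrap the same command. The Python sketch below does two things:

- It builds the docker logs argument list, with -f to follow and --tail to limit the history.
- It streams the output line by line.

docker_logs_args and stream_lines are hypothetical helper names. Merging stderr into stdout is a choice made here, because docker logs replays the container's stderr on its own stderr.

```python
import subprocess


def docker_logs_args(container, follow=False, tail=None):
    """Build a `docker logs` argument list for a container name or ID (hypothetical helper)."""
    args = ["docker", "logs"]
    if follow:
        args.append("-f")  # keep streaming new output
    if tail is not None:
        args += ["--tail", str(tail)]  # start from the last N lines
    args.append(container)
    return args


def stream_lines(cmd):
    """Yield a command's output line by line, with stderr merged into stdout."""
    proc = subprocess.Popen(
        cmd, stdout=subprocess.PIPE, stderr=subprocess.STDOUT, text=True
    )
    try:
        for line in proc.stdout:
            yield line.rstrip("\n")
    finally:
        # Stop a `docker logs -f` process if the caller stops iterating early.
        proc.terminate()
        proc.wait()
        proc.stdout.close()
```

For example, `for line in stream_lines(docker_logs_args("my-container", follow=True)): print(line)` follows a container named my-container (a placeholder) until you interrupt it.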

That's initially good because you can read the logs from a container. However, docker logs only works as long as the container exists. Once the container is removed (for example, with docker rm, or automatically when it was started with --rm), its logs are gone too. We need a better way to collect container logs so we don't lose them when containers go away, because containers come and go all the time.

There are many ways you can process and retain Docker container logs. But this time, I’ll use a syslog server. You’ll notice, though, there’s going to be a missing piece once logs are there—I’ll come back to this later.

Why Syslog for Logging in Docker?

Using a syslog server might not be the first option you think of when you think about collecting container logs. However, it's a cheap and straightforward strategy to help you get started. You may already have a logging architecture built on syslog, and the missing piece is how to collect logs from Docker.

For instance, you could use a central syslog server as an aggregation location and then process all logs to remove sensitive or private information, aggregate data, or forward logs to other places. Additionally, for performance reasons at the networking level, you could decide to run a syslog server locally as a buffer for network traffic. Or perhaps you're even planning on removing syslog soon, but you need to process Docker logs in the meantime. Either way, troubleshooting without proper logs is challenging, and syslog provides a quick and easy way to start.

Docker Syslog Logging And Troubleshooting | Loggly

Configuring a Syslog Server

I'm using an Ubuntu 18.04 server in AWS, which already comes with rsyslog (the syslog server) installed. If it isn't installed on your Ubuntu machine, you can install it with sudo apt install -y rsyslog. Once you have rsyslog, it's good practice to create configuration files specifically for Docker. That way, both the Docker daemon logs and the container logs have a dedicated location.

First, use sudo to create a configuration file at /etc/rsyslog.d/49-docker-daemon.conf that collects all logs from the Docker daemon.

Next, use sudo to create a second configuration file at /etc/rsyslog.d/48-docker-containers.conf that collects logs from containers in a separate file.

Then restart rsyslog (for example, with sudo systemctl restart rsyslog) and confirm that everything is working.
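Before pointing Docker at rsyslog, you can check that it accepts messages by sending one yourself. This Python sketch uses the standard library's logging.handlers.SysLogHandler. The function name, logger name, DAEMON facility, and message text are arbitrary assumptions.

On a default Ubuntu install, rsyslog typically listens on the /dev/log socket rather than on UDP port 514. In that case, pass "/dev/log" as the address.

```python
import logging
import logging.handlers


def send_test_message(address=("localhost", 514), text="rsyslog test message"):
    """Send one message to a syslog server with Python's SysLogHandler.

    Hypothetical helper. address is a (host, port) tuple for UDP, or a Unix
    socket path such as "/dev/log". DAEMON facility and INFO level are
    arbitrary choices for this test.
    """
    handler = logging.handlers.SysLogHandler(
        address=address, facility=logging.handlers.SysLogHandler.LOG_DAEMON
    )
    logger = logging.getLogger("syslog-check")  # hypothetical logger name
    logger.setLevel(logging.INFO)
    logger.addHandler(handler)
    try:
        logger.info(text)
    finally:
        # Detach and close so repeated calls don't stack handlers or leak sockets.
        logger.removeHandler(handler)
        handler.close()
```

After calling send_test_message("/dev/log") on the rsyslog host, the message should appear in rsyslog's output (on Ubuntu, usually /var/log/syslog).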

Configuring Docker for Sending Logs to Syslog

Once rsyslog is ready to process the Docker logs, let’s configure the Docker daemon to send the logs to syslog.

Modify the Docker daemon configuration file, /etc/docker/daemon.json (or create it, as I did), with sudo permissions, and set the logging driver to syslog.

In my configuration, I set the tag option to dev to identify the logs I'm collecting on this server. I'm also using the local syslog server (through a socket), but this could be a remote server as well. For instance, you could set the syslog-address option to a remote address such as tcp+tls://192.168.1.3:514.

You might use the local syslog server to avoid losing logs to network connectivity problems, and have it forward logs to a centralized server.

Now, restart the Docker daemon (for example, with sudo systemctl restart docker).

Docker Syslog Server

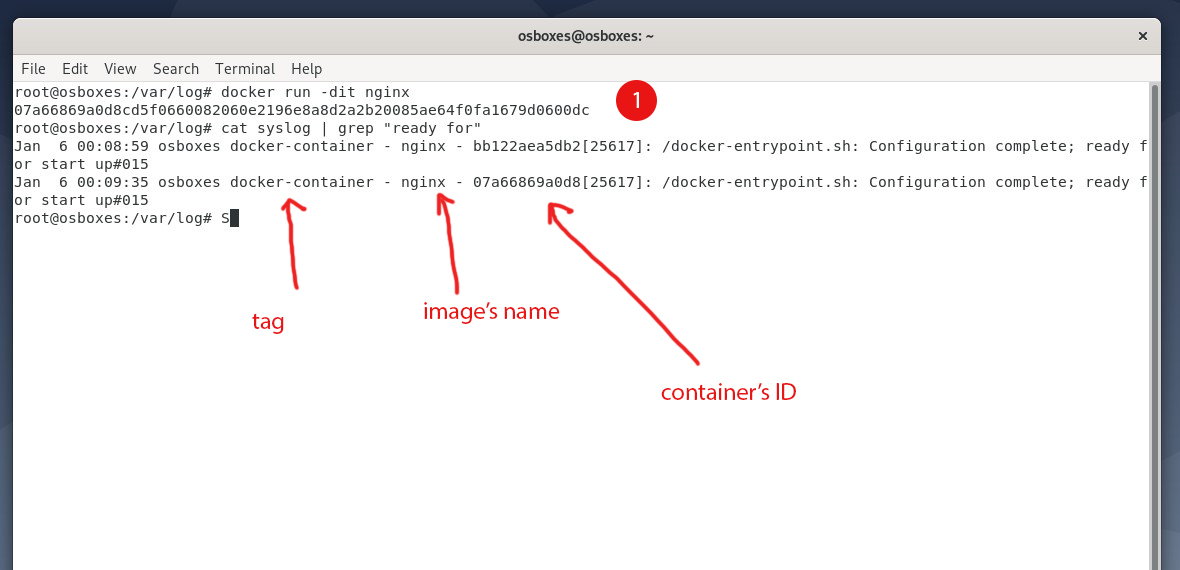

To make sure everything is working, create a new container.

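This time, if you try to read the container's logs with docker logs, you'll get an error, because the syslog driver doesn't support reading logs back. Since Docker Engine 20.10, dual logging keeps a local cache that lets docker logs work with any logging driver, so newer versions may show the logs instead.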

Instead, you can read the container's logs from the file that rsyslog generates.

Both the daemon and container log files are there. Let's look at each one of them.

Docker Syslog

As you can see, the file names and the log format comply with the structure I defined in the rsyslog configuration.

And that’s how you can configure the Docker daemon to send logs to a syslog server.


There’s a Missing Piece

What's the missing piece I mentioned earlier? Once logs land on the syslog server, you need to do something with them. Logs are now centralized and survive the containers that produced them, but you still need a better way of reading them. Going to a server and exploring logs manually with grep or tail won't scale: containers are notoriously dynamic, and their logs tend to be quite prolific. What if you use syslog as an intermediary location? For instance, once you collect logs, you can send them to a system where you can run search queries, identify patterns, or create dashboards and alerts.

Using SolarWinds® Loggly®, there are several ways you can process logs. For instance, you could have an agent running in the syslog server or sidecar container forwarding the logs to Loggly. In Loggly, you can then analyze the logs you’re collecting from your systems, even if they’re not running in containers. Give it a try in Loggly today.

This post was written by Christian Meléndez. Christian is a technologist who started as a software developer and has more recently become a cloud architect focused on implementing continuous delivery pipelines with applications in several flavors, including .NET, Node.js, and Java, often using Docker containers.